Identity Broker Forum

Welcome to the community forum for Identity Broker.

Browse the knowledge base, ask questions directly to the product group, or leverage the community to get answers. Leave ideas for new features and vote for the features or bug fixes you want most.

Managing the Broker Service restart

While troubleshooting SAP connectivity I am repeatedly changing the Unify.Service.Connect.exe.config file and restarting Broker, taking care not to start the service until the existing process has completely stopped, which usually takes about 30 seconds in my environment.

I got tired of doing this manually and wrote the following simple script - which must be run from an Administrator PS session:

    cls

    [bool]$isRunning = [bool](Get-Process "Unify.Service.Connect" -ErrorAction SilentlyContinue)

    if ($isRunning) {
        net stop service.connect
    }

    [int]$counter = 0

    while ($isRunning) {
        $counter++
        Write-Host "Waiting [$counter] seconds for service to shut down ..."
        Start-Sleep 1
        $isRunning = [bool](Get-Process "Unify.Service.Connect" -ErrorAction SilentlyContinue)
    }

    net start service.connect

I hope others find this useful too!

Hey Bob,

Thanks for that script - very helpful.

The other option that you have is using the Unify.Service.Connect.Debug.exe file (and associated config) when you're attempting to develop. The files are identical OOTB, the debug.exe just runs the 'service' as a console app instead of as a service.

The behaviour should be identical, but saves you having to start and stop the service each time you want to make a change. You can simply close the console app, make your changes in the debug.exe.config file, then start it up again and see how it goes. Once you've got your changes working, you can migrate them over to the normal exe.config file for ongoing use. This may make development to that file simpler, but the above script is definitely useful for minor changes.

New Aderant Expert connector failing to clear HPPhoneNumber attribute

MIM has around 940 pending export deletes for HPPhoneNumber attributes, and they are being processed successfully by UNIFYBroker and the Aderant Expert connector (i.e. no export errors). However, during a subsequent Full Import by MIM that attribute is being restored to the non-NULL value, meaning that the next Full Sync results in each of those objects having a new pending export, ad infinitum.

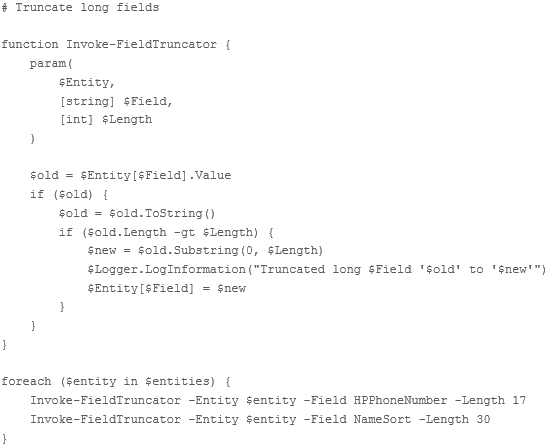

The latest version of the Aderant Expert connector no longer truncates long attribute values; PowerShell transform has been written to prototype a solution in DEV but will need to be ported into the C# connector

Here's the PowerShell transform that was required (so it can be ported to C#):

    # Truncate long fields
    function Invoke-FieldTruncator {
        param(
            $Entity,
            [string] $Field,
            [int] $Length
        )

        $old = $Entity[$Field].Value
        if ($old) {
            $old = $old.ToString()
            if ($old.Length -gt $Length) {
                $new = $old.Substring(0, $Length)
                $Logger.LogInformation("Truncated long $Field '$old' to '$new'")
                $Entity[$Field] = $new
            }
        }
    }

    foreach ($entity in $entities) {
        Invoke-FieldTruncator -Entity $entity -Field HPPhoneNumber -Length 17
        Invoke-FieldTruncator -Entity $entity -Field NameSort -Length 30
    }

Note that these are only the fields that had long data which needed truncation in the DEV environment. When we move to UAT and PROD it is likely there will be other fields with long data that needs to be truncated. A lot of time and debugging effort was expended by the consultant (me) to identify and remediate these two fields in DEV, and this will add to the time required for UAT and PROD deployments. The extra time required will be a particular issue when we come to PROD as it will significantly increase the deployment time during which time the system will be down for the customer.

As a consequence, I suggest that all fields be truncated to their maximum database lengths, not just those listed in the DEV workaround above.
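Until that is done in the C# connector, the suggestion could be prototyped in the same PowerShell transform by driving the truncation from a table of field lengths rather than one call per field. This is a minimal sketch only. The `$maxLengths` table and the `Get-TruncatedValue` helper are hypothetical names, only the two lengths found in DEV are filled in (the real limits would have to be read from the Aderant Expert database schema), and `$sample` is a plain hashtable standing in for an entity so the logic can be tried outside UNIFYBroker:

```powershell
# Hypothetical lookup of field name -> maximum length.
# Only the two limits identified in DEV are listed; others are unknown here.
$maxLengths = @{
    HPPhoneNumber = 17
    NameSort      = 30
}

# Hypothetical helper: returns the value cut to at most $Length characters.
function Get-TruncatedValue {
    param(
        [string] $Value,
        [int]    $Length
    )
    if ($Value.Length -gt $Length) {
        return $Value.Substring(0, $Length)
    }
    return $Value
}

# Stand-in for one entity's field values (not the UNIFYBroker entity type).
$sample = @{
    HPPhoneNumber = '+61 2 5550 0100 ext 12345'
    NameSort      = 'Smith'
}

# Apply every configured limit to the matching field.
foreach ($field in $maxLengths.Keys) {
    $sample[$field] = Get-TruncatedValue -Value $sample[$field] -Length $maxLengths[$field]
}
```

In the transform itself, the loop body would call the existing Invoke-FieldTruncator for each key of the table instead of the stand-in helper.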

Aderant Expert connector fails with "The transaction associated with the current connection has completed but has not been disposed" after a previous SQL timeout failure

After a SQL timeout it appears the SQL connection to Aderant Expert remains with a SQL transaction that has not been disposed. The error is:

20191203,23:22:24,UNIFYBroker,Connector,Warning,"Update entities to connector failed.

Update entities [Count:2071] to connector Global Aderant Expert Connector failed with reason The transaction associated with the current connection has completed but has not been disposed. The transaction must be disposed before the connection can be used to execute SQL statements.. Duration: 00:01:01.0504286

The previous timeout error responsible for the undisposed transaction is:

20191203,23:16:16,UNIFYBroker,Connector,Warning,"Update entities to connector failed.

System.Data.SqlClient.SqlException (0x80131904): Execution Timeout Expired. The timeout period elapsed prior to completion of the operation or the server is not responding. ---> System.ComponentModel.Win32Exception (0x80004005): The wait operation timed out

Update entities [Count:2071] to connector Global Aderant Expert Connector failed with reason Execution Timeout Expired. The timeout period elapsed prior to completion of the operation or the server is not responding.. Duration: 00:01:16.0479697

The workaround is to restart the UNIFYBroker service, to stop it reusing the bad SQL connection.

See also https://voice.unifysolutions.net/communities/6/topics/3995-aderant-expert-agent-ui-doesnt-save-changes-to-the-operation-timeout-parameter for more information about SQL operation timeouts on a Global Aderant Expert connector.

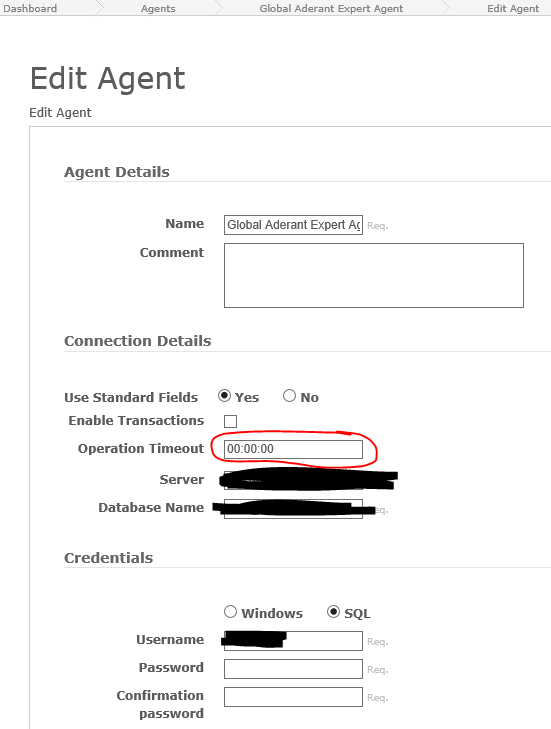

Aderant Expert agent UI doesn't save changes to the Operation Timeout parameter

After changing and saving the "Operation Timeout" parameter on the Aderant Expert agent UI the value is not saved. In the extensibility file, the value "PT0S" is always written.

As a workaround I have edited the extensibility file instead.
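For anyone hand-editing the file: "PT0S" is an ISO 8601 duration meaning zero seconds. The sketch below assumes the extensibility file stores the timeout as an ISO 8601 (xs:duration) string, which the "PT0S" value suggests but which is not confirmed here. It uses .NET's `System.Xml.XmlConvert` to convert between a `TimeSpan` and that format; the five-minute value is an arbitrary example, not a recommended setting:

```powershell
# Parse the value the UI writes: an ISO 8601 duration of zero seconds.
$written = [System.Xml.XmlConvert]::ToTimeSpan('PT0S')

# Produce the duration string for a desired timeout (5 minutes is an arbitrary example).
$desired = [System.Xml.XmlConvert]::ToString([TimeSpan]::FromMinutes(5))

# Round-trip the generated string to check it parses back to the same timeout.
$roundTrip = [System.Xml.XmlConvert]::ToTimeSpan($desired)
```

The string in `$desired` is what would be typed into the file in place of "PT0S", if the assumption about the format holds.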

LDAP search on multivalue attribute returns incorrect data

The following shows a search result which incorrectly returns all org unit records where none of the inspected results actually matched the search criteria:

***Searching...

ldap_search_s(ld, "OU=orgUnits,DC=IdentityBroker", 2, "(hierarchy=50002000)", attrList, 0, &msg)

Matched DNs: OU=orgUnits,DC=IdentityBroker

Getting 1000 entries:

Dn: CN=50022695,OU=orgUnits,DC=IdentityBroker

costCentre: 0000045240;

costCentreGroup: CCCA;

costCentreID: d567d5b9d344523618fc25cc2efe70e7;

costCentreIDRef: CN=0000045240,CN=CCCA,OU=costCentres,DC=IdentityBroker;

costCentreText: International Climate Law;

createTimestamp: 30/11/2019 1:36:03 PM AUS Eastern Daylight Time;

distinguishedName: CN=50022695,OU=orgUnits,DC=IdentityBroker;

entryUUID: 08e5d654-d8a2-4b41-a516-00bd457a93b9;

EXTOBJID: 50022695;

hashDN: 60451482495227d7d95601c180bcceac;

hierarchy (7): 50000100; 50000500; 50022601; 50022677; 50022687; 50022692; 50022695;

hierarchyRef (7): CN=50000100,OU=orgUnits,DC=IdentityBroker; CN=50000500,OU=orgUnits,DC=IdentityBroker; CN=50022601,OU=orgUnits,DC=IdentityBroker; CN=50022677,OU=orgUnits,DC=IdentityBroker; CN=50022687,OU=orgUnits,DC=IdentityBroker; CN=50022692,OU=orgUnits,DC=IdentityBroker; CN=50022695,OU=orgUnits,DC=IdentityBroker;

longText: International Climate Law Section;

modifyTimestamp: 2/12/2019 10:23:42 PM AUS Eastern Daylight Time;

objectClass: orgUnit;

objectEndDate: 99991231000000.000Z;

objectID: 50022695;

objectStartDate: 20071203000000.000Z;

OBJECTTYPE: O;

orgLevel: 7;

orgLevelName: Section;

OU: orgUnits;

parentOrgID: 50022692;

parentOrgIDRef: CN=50022692,OU=orgUnits,DC=IdentityBroker;

PLANSTAT: 1;

PLANVERS: 01;

shortText: DCC ID;

status: Active;

subschemaSubentry: CN=orgUnits,cn=schema;

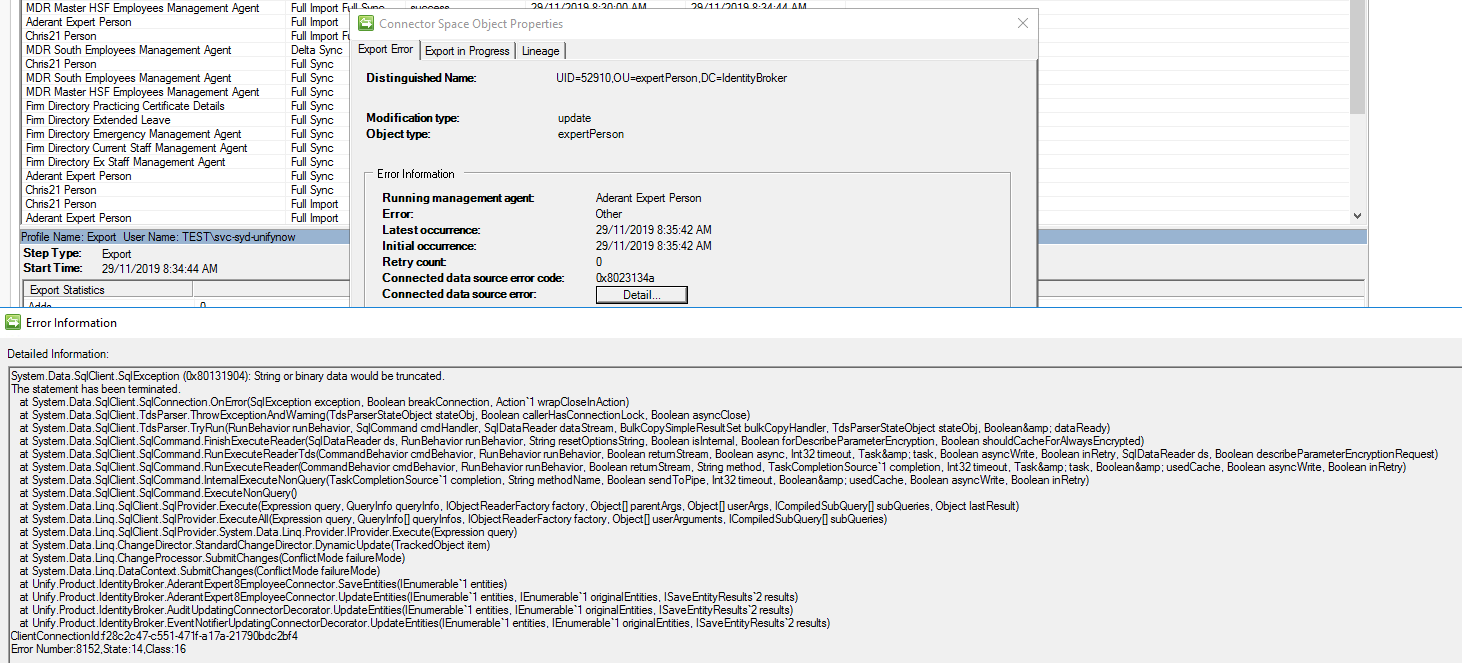
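The mismatch can be confirmed by applying the same equality test locally to the values shown for the first returned entry. This is a minimal sketch using the hierarchy values copied from the search output in this post; the variable names are illustrative only:

```powershell
# hierarchy values of CN=50022695 as returned by the search.
$hierarchy = '50000100', '50000500', '50022601', '50022677',
             '50022687', '50022692', '50022695'

# The filter (hierarchy=50002000) should match only if one value equals this.
$searchValue = '50002000'

# True only when the multivalue attribute contains the searched value.
$isMatch = $hierarchy -contains $searchValue
```

`$isMatch` evaluates to false for this entry, so an equality filter on hierarchy should not have returned it.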

Aderant Expert MA 'string or binary data would be truncated' error on export

Using the new version of the Aderant Expert connector I'm seeing this error. This is the first time I've attempted an export with the new connector. The configuration is a migration of the old version of the connector, talking to a database which is a copy of the one used by the old version of the connector.

UNIFYBroker v5.3.2 Revision #0

Aderant Expert Connector 5.3.1.1

Chris21 Connector 5.3.0.0

Could you please assist in working out what's wrong?

That means there's a field in the Aderant database with a length limit, and you're trying to write a value to it that exceeds that limit.

Full import returns only root node

When running a FULL IMPORT on an IdB 5.3.2 implementation I am getting data returned from only 2 of the 7 configured partitions - yet data is clearly visible for each of them via LDP, making me suspect an issue with the Broker for MIM component. I have tried deleting and recreating run profiles, refreshing schema, reloading interfaces, and even creating a new instance of the MA - but still the same result.

There are no exceptions being logged for the full import (currently in VERBOSE mode). As an example:

- The AREA adapter correctly returns 11 records, including the root container node (although the Total Entities count incorrectly shows as 0 on the Import job counter)

- The COMPANIES adapter returns 1 record, being the container node only - despite all objects appearing correctly via LDP.EXE.

Can I please have some urgent assistance to determine the root cause?

Concurrency in UNIFYBroker

Hi Guys,

Couldn't find an existing ticket or knowledge ticket about this so I thought I would start one.

In the past I have designed schedules and exclusion groups around the idea that you could not import from an adapter and run an import on the respective connector at the same time, as it would cause sluggishness within Broker. Additionally, reading from an adapter while UNIFYBroker is committing changes will also cause some sort of locking (whether it locks up entirely or just takes a long time).

So I was hoping you could tell me how UNIFYBroker handles concurrency. More specifically, what operations it can do at the same time. E.g.:

- Can you import from two connectors at the same time (whether it's a one-to-one or a many-to-one adapter relationship)?

- Can you import from an adapter while an import is being run on the respective connector?

- Can you do an import all and a delta import at the same time without anything locking up (not that I do this, but it happens from time to time)?

If you can let me know of any operations that couldn't be run at the same time that would be great, as it would be good to define a concrete way to schedule UNIFYBroker operations.

Thanks

Hi Hayden,

Broker can handle running connector imports, reflecting changes into adapters, and reading and writing adapter entities via a gateway concurrently. The only scenario Broker won't allow is doing two imports (i.e. full and delta) at the same time on the same connector.

That said, yes, this can cause these tasks to take longer to complete when multiple operations are competing for CPU and disk resources. Scheduling various operations might be a good strategy to improve performance, especially on machines which fall below the recommended system requirements and/or where system resources are shared between Broker, the database and other services, but it isn't something you always need to do.

OU case renames not working

When I changed the case of an OU name in an adapter from OrgUnits to orgUnits, the data returned subsequently over the LDAP interface was not adjusted accordingly. I believe I tried each of the following without success (but you may want to prove this for yourself):

- restarting the IdB service

- deleting the adapter and reloading it

- deleting the connector and adapter data and reloading them both

Eventually I had to go into the Broker SQL table (container names I think it was), locate the offending record, and update it there.

This has been implemented and is available in the release of UNIFYConnect V6, which will be made available shortly.
