Identity Broker Forum

Welcome to the community forum for Identity Broker.

Browse the knowledge base, ask questions directly to the product group, or leverage the community to get answers. Leave ideas for new features and vote for the features or bug fixes you want most.

Baseline Sync to revert target system changes takes a long time, even when there are no actual changes

My customer has 9,000 users in AD corresponding to 7,000 in a locker of which approximately 4,000 are joined (the discrepancy being due to terminated HR users who don't have or need to be provisioned with AD accounts).

When I run a Baseline Sync on my AD link to revert any unauthorised changes made to users in AD (for example, to disable accounts that have been manually enabled when they shouldn't have been), it takes upwards of 30 minutes to run, and all other system processing is blocked for that time (since link operations are blocking). This doesn't meet the customer's expectations that unauthorised AD changes are reverted within 15-30 minutes and that low-latency operations (such as manual suspensions using the Suspended Employees feature) are always processed with a delay of no more than a few minutes.

Some possible ways to solve this problem would be to add a "true-up" operation, or allow parallel sync operations.
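One way to picture a "true-up" is as a reconciliation that only produces work for entities that have actually drifted, so a run with no unauthorised changes does almost nothing after reading state. The sketch below assumes the desired and current enabled states can be read as simple dictionaries keyed by a join identifier; `true_up` and the data shapes are hypothetical, not UNIFYConnect APIs.

```python
from typing import Dict, List, Tuple

# Hypothetical model: join id -> whether the AD account should be / is enabled.
State = Dict[str, bool]


def true_up(desired: State, actual: State) -> List[Tuple[str, bool]]:
    """Return (join_id, target_enabled) corrections for drifted entities only.

    Only entities present in both views (i.e. joined) are compared. Entities
    whose state already matches are skipped, so an unchanged directory
    yields no outbound work at all.
    """
    corrections = []
    for join_id in sorted(desired.keys() & actual.keys()):
        if desired[join_id] != actual[join_id]:
            corrections.append((join_id, desired[join_id]))
    return corrections


if __name__ == "__main__":
    desired = {"u1": False, "u2": True, "u3": False}
    actual = {"u1": True, "u2": True, "u3": False, "u4": True}  # u4 is unjoined
    print(true_up(desired, actual))  # [('u1', False)]
```

Reading current state still has a cost; the assumed saving is that matching entities never enter the blocking outbound pipeline.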

This has been implemented and is available in the release of UNIFYConnect V6, which will be made available shortly.

Pending Export Report capability required to target directory

There is already a Test Mode concept, but it appears to be limited when it comes to providing a pre-execution report of ANY pending change to a target system.

Existing MIM best practice has incorporated this capability for many years, and the equivalent is now required in the Broker+ and UNIFYConnect platforms.
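For illustration, a pending export report can be thought of as a dry-run diff: compare each entity's desired attribute values with what the target currently holds and list the operations that would be exported, without performing any of them. This is a hypothetical sketch; `pending_exports` and the dictionary shapes are assumptions, not the format used by the Channels feature.

```python
from typing import Dict, List

Entity = Dict[str, str]


def pending_exports(desired: Dict[str, Entity], target: Dict[str, Entity]) -> List[str]:
    """Describe, without executing, the changes an export would make.

    Assumes anything in the target but not in the desired set would be
    deprovisioned; a real system would consult its deprovisioning rules.
    """
    report = []
    for key in sorted(desired.keys() | target.keys()):
        want, have = desired.get(key), target.get(key)
        if have is None:
            report.append(f"ADD {key}: {want}")
        elif want is None:
            report.append(f"DELETE {key}")
        else:
            # Attribute-level differences for entities present on both sides.
            for attr in sorted(want.keys() | have.keys()):
                if want.get(attr) != have.get(attr):
                    report.append(f"UPDATE {key}.{attr}: {have.get(attr)!r} -> {want.get(attr)!r}")
    return report
```

Because the function only returns descriptions, it can run against a live target without side effects.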

Pending Exports capability can now be achieved using the Channels feature available with UNIFYConnect v7.0.195159

Test harness for Adapter and Link PowerShell Transformations

In order to support the unit testing requirements for transitioning PS solutions on Broker+ to the UNIFYConnect hosted platform, a test harness is required for all PowerShell transformations.
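As a language-neutral illustration of the table-driven shape such a harness might take: each case pairs an input entity with the expected output entity, and the harness reports every mismatching case. The sketch is in Python; `example_transform` is a hypothetical stand-in for a PowerShell transformation, and a real harness would need to invoke the PowerShell script itself, which is not shown here.

```python
from typing import Callable, Dict, List, Tuple

Entity = Dict[str, str]
Case = Tuple[str, Entity, Entity]  # (case name, input entity, expected output)


def run_cases(transform: Callable[[Entity], Entity], cases: List[Case]) -> List[str]:
    """Run each case through the transform and return descriptions of failures."""
    failures = []
    for name, given, expected in cases:
        actual = transform(dict(given))  # copy so one case can't leak state into another
        if actual != expected:
            failures.append(f"{name}: expected {expected}, got {actual}")
    return failures


def example_transform(entity: Entity) -> Entity:
    # Hypothetical transformation: derive DisplayName from first and last names.
    entity["DisplayName"] = f"{entity['FirstName']} {entity['LastName']}"
    return entity


if __name__ == "__main__":
    cases = [("basic", {"FirstName": "Ada", "LastName": "Lovelace"},
              {"FirstName": "Ada", "LastName": "Lovelace", "DisplayName": "Ada Lovelace"})]
    print(run_cases(example_transform, cases) or "all cases passed")
```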

Locker Field Search "Is-Null" True or False returns HTTP 500 Error

We are unable to search the locker using the "Is-Null" Search term. This issue occurs across all environments that I have checked so far.

This search term works well in Connectors and Adapters and would be extremely useful for lockers as well.

Any attempt to use the Is-Null Search term in a locker returns the following error:

System.AggregateException: One or more errors occurred. ---> Unify.Framework.Client.SwaggerException: The HTTP status code of the response was not expected (500).

at Unify.Connect.Web.Client.LockerEntityClient.<SearchEntitiesAsync>d__11.MoveNext()

--- End of stack trace from previous location where exception was thrown ---

at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()

at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)

at Unify.Connect.Web.Client.ProfiledLockerEntityClient.<SearchEntitiesAsync>d__4.MoveNext()

--- End of inner exception stack trace ---

at System.Threading.Tasks.Task`1.GetResultCore(Boolean waitCompletionNotification)

at Unify.Connect.Web.LockerController.InnerRetrievalToResults[TResult](EntityRetrievalInformation`1 retrievalInformation, Func`3 getResults)

at Unify.Connect.Web.LockerController.LockerEntities(EntityRetrievalInformation`1 information)

at Unify.Connect.Web.LockerController.<LockerEntityData>d__65.MoveNext()

--- End of stack trace from previous location where exception was thrown ---

at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()

at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)

at lambda_method(Closure , Task )

at System.Web.Mvc.Async.TaskAsyncActionDescriptor.EndExecute(IAsyncResult asyncResult)

at System.Web.Mvc.Async.AsyncControllerActionInvoker.<>c__DisplayClass34.<BeginInvokeAsynchronousActionMethod>b__33(IAsyncResult asyncResult)

at System.Web.Mvc.Async.AsyncControllerActionInvoker.EndInvokeActionMethod(IAsyncResult asyncResult)

at System.Web.Mvc.Async.AsyncControllerActionInvoker.AsyncInvocationWithFilters.<InvokeActionMethodFilterAsynchronouslyRecursive>b__3c()

at System.Web.Mvc.Async.AsyncControllerActionInvoker.AsyncInvocationWithFilters.<>c__DisplayClass45.<InvokeActionMethodFilterAsynchronouslyRecursive>b__3e()

at System.Web.Mvc.Async.AsyncControllerActionInvoker.EndInvokeActionMethodWithFilters(IAsyncResult asyncResult)

at System.Web.Mvc.Async.AsyncControllerActionInvoker.<>c__DisplayClass1e.<>c__DisplayClass28.<BeginInvokeAction>b__19()

at System.Web.Mvc.Async.AsyncControllerActionInvoker.<>c__DisplayClass1e.<BeginInvokeAction>b__1b(IAsyncResult asyncResult)

---> (Inner Exception #0) HTTP Response: {"Message":"An error has occurred.","ExceptionMessage":"Illegal operator '==' at 'Unify.Product.IdentityBroker.FilterInformation'. The supported operators are: 'true, false'.","ExceptionType":"System.NotSupportedException","StackTrace":" at Unify.Connect.Web.IdentifierEntitySearchUtilityBase`2.GenerateSearchFunction(FilterInformation searchInformation, Lazy`1 schema)\r\n at Unify.Product.IdentityBroker.DefaultEntityControllerFilterCondition`1.Apply(FilterInformation information, IEnumerable`1 entities)\r\n at Unify.Product.IdentityBroker.EntityControllerSearchFilter`2.ApplyFilter(IEnumerable`1 entities, FilterInformation filterInformation, Guid partitionId)\r\n at Unify.Product.IdentityBroker.EntityControllerSearchFilter`2.<>c__DisplayClass7_0.<ApplyFilter>b__0(IEnumerable`1 filtered, FilterInformation filter)\r\n at System.Linq.Enumerable.Aggregate[TSource,TAccumulate](IEnumerable`1 source, TAccumulate seed, Func`3 func)\r\n at Unify.Product.IdentityBroker.EntityControllerSearchFilter`2.ApplySearch(SearchInformation searchInformation, IEnumerable`1 entities, Guid partitionId)\r\n at Unify.Product.Plus.LockerEntityController.SearchEntities(Guid partitionId, SearchInformation searchInformation)\r\n at lambda_method(Closure , Object , Object[] )\r\n at System.Web.Http.Controllers.ReflectedHttpActionDescriptor.ActionExecutor.<>c__DisplayClass10.<GetExecutor>b__9(Object instance, Object[] methodParameters)\r\n at System.Web.Http.Controllers.ReflectedHttpActionDescriptor.ExecuteAsync(HttpControllerContext controllerContext, IDictionary`2 arguments, CancellationToken cancellationToken)\r\n--- End of stack trace from previous location where exception was thrown ---\r\n at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()\r\n at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)\r\n at System.Web.Http.Controllers.ApiControllerActionInvoker.<InvokeActionAsyncCore>d__0.MoveNext()\r\n--- End of stack trace from previous location where exception was thrown ---\r\n at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()\r\n at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)\r\n at System.Web.Http.Controllers.ActionFilterResult.<ExecuteAsync>d__2.MoveNext()\r\n--- End of stack trace from previous location where exception was thrown ---\r\n at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()\r\n at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)\r\n at System.Web.Http.Filters.AuthorizationFilterAttribute.<ExecuteAuthorizationFilterAsyncCore>d__2.MoveNext()\r\n--- End of stack trace from previous location where exception was thrown ---\r\n at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()\r\n at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)\r\n at System.Web.Http.Dispatcher.HttpControllerDispatcher.<SendAsync>d__1.MoveNext()"} Unify.Framework.Client.SwaggerException: The HTTP status code of the response was not expected (500).

at Unify.Connect.Web.Client.LockerEntityClient.<SearchEntitiesAsync>d__11.MoveNext()

--- End of stack trace from previous location where exception was thrown ---

at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()

at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)

at Unify.Connect.Web.Client.ProfiledLockerEntityClient.<SearchEntitiesAsync>d__4.MoveNext()<---
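The inner exception suggests the locker search sent the Is-Null term with the `==` operator, whereas that filter only accepts `true` or `false` as its operator. A hypothetical sketch of the distinction, with operator validation done before the search runs so a mismatch fails fast with a descriptive error. The term names and the `SUPPORTED_OPERATORS` table are assumptions for illustration, not the product's internal schema.

```python
from typing import Any, Callable, Dict

Entity = Dict[str, Any]

# Hypothetical table of which operators each search term accepts.
SUPPORTED_OPERATORS = {
    "equals": {"==", "!="},
    "is-null": {"true", "false"},
}


def build_filter(term: str, field: str, operator: str, value: Any = None) -> Callable[[Entity], bool]:
    allowed = SUPPORTED_OPERATORS.get(term)
    if allowed is None or operator not in allowed:
        # Fail fast with a descriptive error; a web layer could map this to a 400 rather than a 500.
        raise ValueError(f"Illegal operator {operator!r} for {term!r}; supported: {sorted(allowed or [])}")
    if term == "is-null":
        want_null = operator == "true"
        # A missing field is treated the same as an explicit null.
        return lambda e: (e.get(field) is None) == want_null
    if operator == "==":
        return lambda e: e.get(field) == value
    return lambda e: e.get(field) != value
```

For example, `build_filter("is-null", "mail", "true")` keeps entities with no `mail` value, while `build_filter("is-null", "mail", "==")` is rejected before any entities are scanned.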

Closing, as a patch has been provided for 5.3 and rolled into all UNIFYConnect Cloud deployments.

UNIFYConnect update threshold/safety catch

A UNIFYConnect customer has requested a safety catch feature that stops updates when they exceed a certain threshold. I know that Broker/BrokerPlus has a safety catch feature for entity deletion thresholds, but does anything currently exist for updates? Ideally this would be a check that, if the number of changes on a link is over X, stops the sync, disables the schedules for that link, and then logs an error to be picked up by monitoring/alerting.

The only topic I could find on this at the moment is "Safety Catch Feature" on the UNIFYBroker Forum (UNIFY Solutions).

Update Threshold / Safety Catch is now available with the Channels feature on UNIFYConnect v7.0.195159.
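The check described above can be sketched as a guard that counts pending changes before applying any of them. This is a hypothetical model: `Link`, `guarded_sync`, and `ThresholdExceeded` are illustrative names, and "disabling schedules" is represented by a flag rather than a real product call.

```python
import logging
from dataclasses import dataclass, field
from typing import List

log = logging.getLogger("safety_catch")


class ThresholdExceeded(Exception):
    pass


@dataclass
class Link:  # hypothetical stand-in for a link and its schedules
    name: str
    schedules_enabled: bool = True
    applied: List[str] = field(default_factory=list)


def guarded_sync(link: Link, pending_changes: List[str], threshold: int) -> None:
    """Apply changes only if their count is within the threshold."""
    if len(pending_changes) > threshold:
        link.schedules_enabled = False  # stop further scheduled runs on this link
        # Error-level log entry for monitoring/alerting to pick up.
        log.error("Safety catch on link %s: %d pending changes exceeds threshold %d",
                  link.name, len(pending_changes), threshold)
        raise ThresholdExceeded(f"{len(pending_changes)} > {threshold}")
    link.applied.extend(pending_changes)  # stand-in for actually exporting
```

Checking the count before applying anything means a tripped safety catch leaves the target untouched, rather than stopping part-way through a batch.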

Attribute changes don't trigger pending sync changes on a link if they are processed in a PowerShell task on the link

I have noticed that if a link has a PowerShell task on an outgoing sync that modifies and sets attributes in the target, and a change flows through that affects only an attribute modified in the PowerShell task, the link doesn't pick it up as a pending sync change. Only running a baseline sync on the link triggers the PowerShell task to modify that attribute.

Discussion was had with Matt Davis about using the Register-Contribution function on the link for the attributes modified through the PowerShell task, so that changes to those attributes are recognised for the affected entities. However, it is unclear whether the Register-Contribution function is available for links. As an alternative, the PowerShell script can be moved to the adapter as a reverse transform, which triggers on outgoing sync changes from a link to a connector. The contributing attributes can then be synced as direct mappings in the link.

This has been implemented and is available in the release of UNIFYConnect V6, which will be made available shortly.

"Process unmapped changes" can be used to resolve this in the new software version, which will trigger changes for all entity fields rather than just those with direct mappings.

Alternatively, add a direct mapping for the field and override it with PowerShell sync logic.
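To illustrate why the PowerShell-only attribute change was missed, here is a hypothetical model of change detection: when only directly mapped fields are considered, a change to a field that no mapping reads produces no pending sync; treating every field as relevant (as "Process unmapped changes" is described to do) picks it up. `is_pending` and its parameters are assumptions, not the product's internals.

```python
from typing import Dict, Set


def is_pending(before: Dict[str, str], after: Dict[str, str],
               mapped_fields: Set[str], process_unmapped: bool) -> bool:
    """Decide whether an entity change should raise a pending sync on the link."""
    changed = {k for k in before.keys() | after.keys() if before.get(k) != after.get(k)}
    # Without "process unmapped", only changes to directly mapped fields count.
    relevant = changed if process_unmapped else changed & mapped_fields
    return bool(relevant)
```

Adding a direct mapping for the field (the alternative above) has the same effect in this model, because the field then appears in `mapped_fields`.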

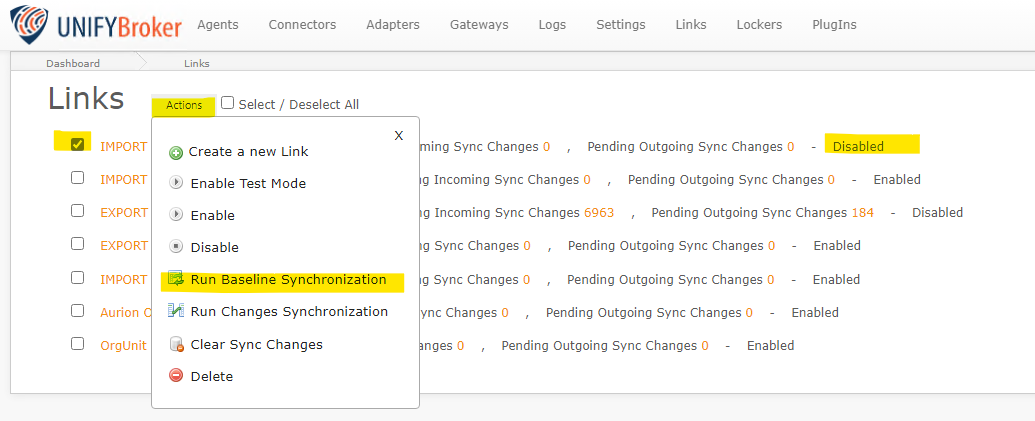

Link Baseline sync still able to be run from Links page when link is disabled.

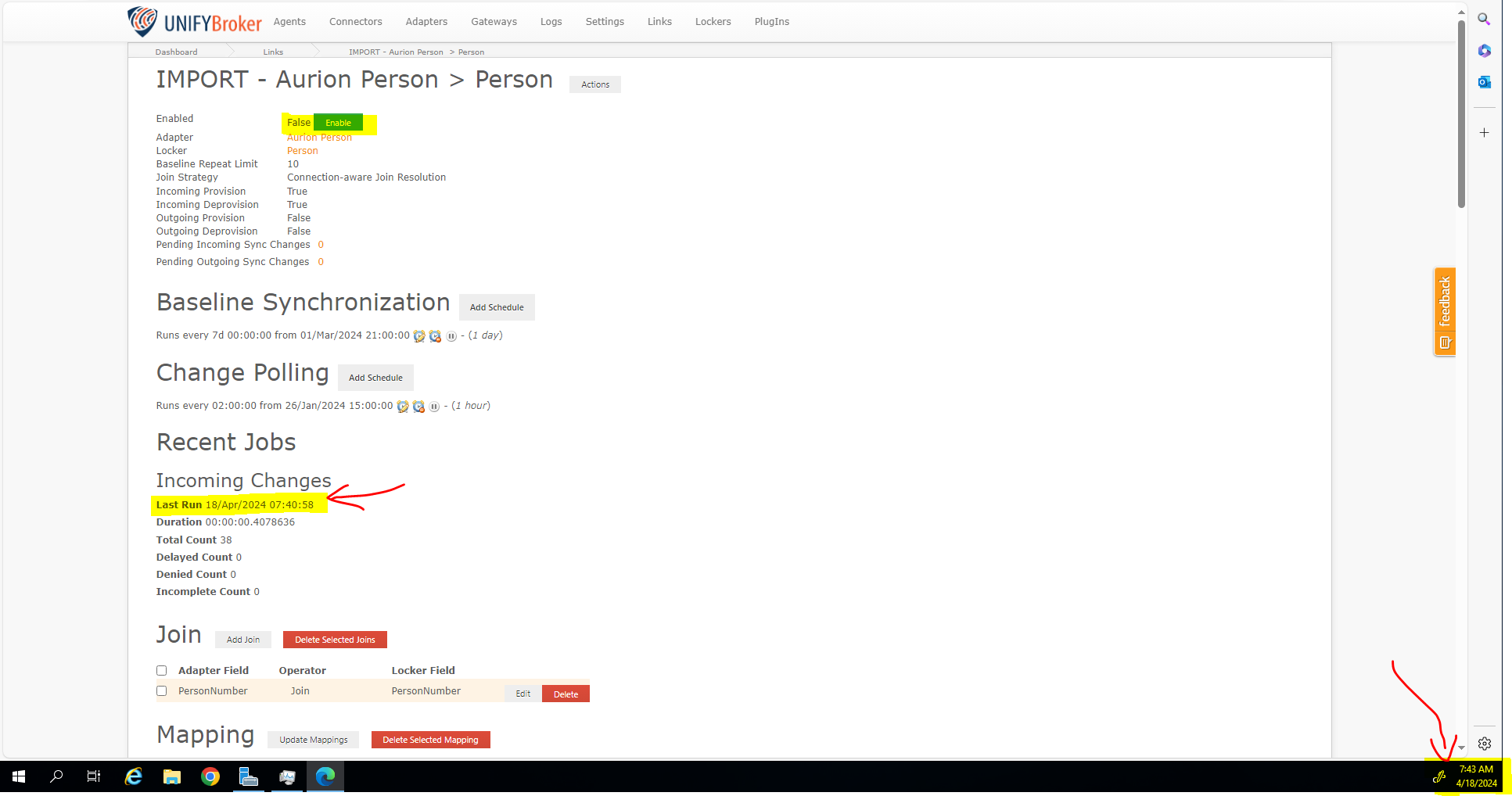

Link Baseline sync still able to be run from Links page when link is disabled.

I have noticed that under normal operation, a baseline synchronization task on a link cannot be executed while the link is disabled. In the link UI, the option to run a baseline sync only becomes visible when the link is enabled. However I have found that on the Links page (that lists the links in the solution) selecting a disabled link and running a baseline sync through the Actions button at the top of the page still executes the baseline sync on the disabled link. Not sure if this is expected behaviour or is a bug.

Screenshot #1: Running a baseline sync task on a disabled link through the Actions button:

Screenshot #2: The baseline sync still executed even though the link is disabled:
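One plausible reading is that the enabled check lives in the link page's UI rather than in the operation itself. A hypothetical sketch of enforcing it at a single entry point that every caller (link page, Links page Actions menu, schedules) goes through, so no UI path can bypass it. `Link`, `LinkDisabledError`, and `run_baseline_sync` are illustrative names only.

```python
from dataclasses import dataclass


class LinkDisabledError(Exception):
    pass


@dataclass
class Link:
    name: str
    enabled: bool
    runs: int = 0


def run_baseline_sync(link: Link) -> None:
    """Single entry point for baseline syncs; refuses to run on disabled links."""
    if not link.enabled:
        raise LinkDisabledError(f"Link {link.name!r} is disabled; baseline sync refused")
    link.runs += 1  # stand-in for the actual synchronization work
```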

This has been implemented and is available in the release of UNIFYConnect V6, which will be made available shortly.

Button to allow UI deletion of locker entities

When a locker has inbound provisioning but not inbound deprovisioning it is sometimes desirable to manually delete an entity which is no longer wanted. A button to do this would be helpful, because the current workaround is to suspend all outbound processing, delete all locker entities and perform a complete data reload.

This has been implemented and is available in the release of UNIFYConnect V6, which will be made available shortly.

Locker entities should not retain attribute values that came from joins that have been removed

After a link join is removed using the UI, the locker entity retains attribute values that were previously contributed through the link. This is highly undesirable, since the locker now holds information that should not be there. In my case it is an AD samaccountname value, which is misleading: the AD user's adapter entity join was removed so that the adapter entity could rejoin to a different locker record, and now both locker records have the same samaccountname value. There is no way to remove this out-of-date attribute data from the locker other than joining the locker entity to a different adapter entity (which may not be feasible) or performing a complete data reload from scratch (which is time-consuming and causes an outage). Running a baseline sync on the link does not fix the problem. The attribute values on the entity should be updated appropriately when the join is removed.
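A hypothetical sketch of the requested behaviour: if a locker entity remembers which join contributed each attribute value, removing a join can clear exactly those values. `LockerEntity` and its methods are illustrative, not the product's model. As a design choice this sketch simply clears the values; a real implementation might instead fall back to another contributor's value where one exists.

```python
from typing import Dict


class LockerEntity:
    """Hypothetical locker entity that remembers which join supplied each value."""

    def __init__(self) -> None:
        self.values: Dict[str, str] = {}
        self.contributor: Dict[str, str] = {}  # attribute name -> join id

    def contribute(self, join_id: str, attrs: Dict[str, str]) -> None:
        for name, value in attrs.items():
            self.values[name] = value
            self.contributor[name] = join_id

    def remove_join(self, join_id: str) -> None:
        # Clear every attribute whose current value came from the removed join.
        for name in [n for n, j in self.contributor.items() if j == join_id]:
            del self.values[name]
            del self.contributor[name]
```

With this bookkeeping, removing the AD join would drop the stale samaccountname value instead of leaving it on the locker record.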

This has been implemented and is available in the release of UNIFYConnect V6, which will be made available shortly.

Renaming a locker field results in "An item with the same key has already been added" UI error

I renamed a locker field "HRISEmailAddress" to "EmailAddress" in the UNIFYBroker UI, and this stack dump error appeared:

System.Exception: Swagger Exception could not be parsed. SE response code: 500; SE response text: {"Message":"An error has occurred.","ExceptionMessage":"'The field EmailAddress could not be added to the schema","ExceptionType":"Unify.Framework.Schema.SchemaException","StackTrace":" at Unify.Framework.Schema.Schema`6.Add(TKey key, TFieldDef value)\r\n at Unify.Framework.Visitor.Visit[T](IEnumerable`1 visitCollection, Action`2 visitor)\r\n at Unify.Product.IdentityBroker.EntitySchemaFactory.CreateComponent(IEntitySchemaConfiguration factoryInformation)\r\n at Unify.Product.Plus.LockerEngine.GenerateLockerPair(ILockerInformation lockerConfiguration)\r\n at Unify.Product.Plus.LockerEngine.LockerConfigurationChanged(Guid lockerId, Action`1 lockerAction)\r\n at Unify.Product.Plus.LockerEngine.<>c__DisplayClass33_0.<UpdateLockerSchemaRow>b__0()\r\n at Unify.Product.Plus.LockerEngine.<>c__DisplayClass49_0.<ConfigurationChanged>b__0()\r\n at Unify.Framework.ExtensionMethods.WaitOnMutex(Mutex mutex, Action work)\r\n at Unify.Product.Plus.LockerEngineAuditingDecorator.UpdateLockerSchemaRow(Guid lockerId, IEntitySchemaFieldDefinitionConfiguration entitySchemaRowConfiguration)\r\n at Unify.Product.Plus.LockerEngineNotifierDecorator.<>c__DisplayClass25_0.<UpdateLockerSchemaRow>b__0()\r\n at Unify.Framework.Notification.NotifierDecoratorBase.Notify(ITaskNotificationFactory notificationFactory, Action action)\r\n at lambda_method(Closure , Object , Object[] )\r\n at System.Web.Http.Controllers.ReflectedHttpActionDescriptor.ActionExecutor.<>c__DisplayClassc.<GetExecutor>b__6(Object instance, Object[] methodParameters)\r\n at System.Web.Http.Controllers.ReflectedHttpActionDescriptor.ExecuteAsync(HttpControllerContext controllerContext, IDictionary`2 arguments, CancellationToken cancellationToken)\r\n--- End of stack trace from previous location where exception was thrown ---\r\n at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()\r\n at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)\r\n at System.Web.Http.Controllers.ApiControllerActionInvoker.<InvokeActionAsyncCore>d__0.MoveNext()\r\n--- End of stack trace from previous location where exception was thrown ---\r\n at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()\r\n at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)\r\n at System.Web.Http.Controllers.ActionFilterResult.<ExecuteAsync>d__2.MoveNext()\r\n--- End of stack trace from previous location where exception was thrown ---\r\n at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()\r\n at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)\r\n at System.Web.Http.Filters.AuthorizationFilterAttribute.<ExecuteAuthorizationFilterAsyncCore>d__2.MoveNext()\r\n--- End of stack trace from previous location where exception was thrown ---\r\n at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()\r\n at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)\r\n at System.Web.Http.Filters.AuthorizationFilterAttribute.<ExecuteAuthorizationFilterAsyncCore>d__2.MoveNext()\r\n--- End of stack trace from previous location where exception was thrown ---\r\n at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()\r\n at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)\r\n at System.Web.Http.Dispatcher.HttpControllerDispatcher.<SendAsync>d__1.MoveNext()","InnerException":{"Message":"An error has occurred.","ExceptionMessage":"An item with the same key has already been added.","ExceptionType":"System.ArgumentException","StackTrace":" at System.ThrowHelper.ThrowArgumentException(ExceptionResource resource)\r\n at System.Collections.Generic.Dictionary`2.Insert(TKey key, TValue value, Boolean add)\r\n at Unify.Framework.Schema.Schema`6.Add(TKey key, TFieldDef value)"}}; ---> Unify.Framework.Client.SwaggerException: The HTTP status code of the response was not expected (500).

When I tried to go back to the main locker UI page to select the affected locker and fix my mistake, the following error appeared, and it now appears every time:

System.ArgumentException: An item with the same key has already been added.

Could you please review the config and fix it so the locker screen works again?

Hi Adrian

The config hasn't been changed; it's just the in-memory configuration that's in a bad state. If you have access to the Broker API for that environment, you can use the Locker/UpdateLockerSchemaRowName method to manually change the problematic field's name. This method needs the row ID, which can be retrieved using the Locker/GetLockerConfiguration method. Alternatively, restarting the service will reload the still-correct configuration from file.
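Assuming the rename failed because `EmailAddress` collided with an existing key (the inner `ArgumentException` comes from `Dictionary.Insert`), a hypothetical sketch of a validate-then-swap rename: the new schema is built separately and only used if it is valid, so a rejected rename cannot leave the in-memory configuration in a bad state. `rename_field` and `SchemaError` are illustrative names, not the Broker API.

```python
from typing import Dict


class SchemaError(Exception):
    pass


def rename_field(schema: Dict[str, dict], old: str, new: str) -> Dict[str, dict]:
    """Return a new schema with `old` renamed to `new`; never mutates the input.

    Validation happens before any state changes, so a rejected rename leaves
    the live (in-memory) schema exactly as it was.
    """
    if old not in schema:
        raise SchemaError(f"No field named {old!r}")
    if new != old and new in schema:
        raise SchemaError(f"A field named {new!r} already exists")
    # Rebuild in the original order, swapping only the renamed key.
    return {(new if key == old else key): definition for key, definition in schema.items()}
```

The caller would replace its live schema with the returned dictionary only after the call succeeds.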
