The latest version of the Aderant Expert connector no longer truncates long attribute values; PowerShell transform has been written to prototype a solution in DEV but will need to be ported into the C# connector

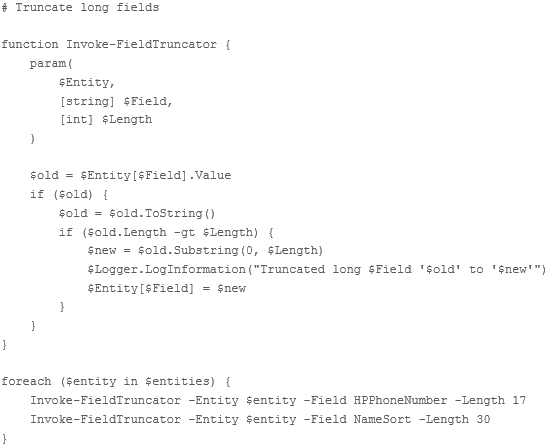

Here's the PowerShell transform that was required (so it can be ported to C#):

# Truncate long fields

function Invoke-FieldTruncator {

param(

$Entity,

[string] $Field,

[int] $Length

)

$old = $Entity[$Field].Value

if ($old) {

$old = $old.ToString()

if ($old.Length -gt $Length) {

$new = $old.Substring(0, $Length)

$Logger.LogInformation("Truncated long $Field '$old' to '$new'")

$Entity[$Field] = $new

}

}

}

foreach ($entity in $entities) {

Invoke-FieldTruncator -Entity $entity -Field HPPhoneNumber -Length 17

Invoke-FieldTruncator -Entity $entity -Field NameSort -Length 30

}

Note that these are only the fields that had long data which needed truncation in the DEV environment. When we move to UAT and PROD it is likely there will be other fields with long data that needs to be truncated. A lot of time and debugging effort was expended by the consultant (me) to identify and remediate these two fields in DEV, and this will add to the time required for UAT and PROD deployments. The extra time required will be a particular issue when we come to PROD as it will significantly increase the deployment time during which time the system will be down for the customer.

As a consequence, I suggest that all fields be truncated to their maximum database lengths, not just those listed in the DEV workaround above.

Customer support service by UserEcho

Further investigation shows that truncating values on SQL write causes MIM to queue another update when it re-reads the truncated values.

I think the old connector must have updating records in a non-batch manner, and cycling those with long attributes over and over due to the truncated values. The new version fails the entire SQL batch instead - hence the issue is much more obvious as one long attribute affects all records, not just that record.

Hi Adrien,

The old connector did not ever truncate values, and neither will the current one. It won't work as it goes against the design philosophy of connectors and Broker as a whole. Making arbitrary decisions on data is not the place of a connector, it's the responsibility of the implementation and Broker provides the tools to do this. If things worked in the past then the difference lies with either the data and/or the database schema.

To assist with your current issues, I'd recommend configuring maximum length limits on any fields you believe to be at risk of containing oversized strings. This will cause the MIM export to fail if this limit is exceeded and warnings output to the Broker log detailing which entity, field and value has caused the failure. This would have been useful to us if I'd remembered this feature existed, and that it worked on both imports and exports, last week, but you can start using it now.

To resolve the issue, however, will required you to correct the invalid data in some way. Whether this means filtering entities, fixing the source data, PowerShell transformations or something else, it will have to be determined by you for your implementation.Also, regarding truncations in PowerShell transformations, I'd recommend not blindly truncating to max allowed length. It's a quick and dirty fix that looks like one, and Expert may not always be happy getting (what it may consider) poorly formed data injected into its database.

Hi Beau,

I agree - it was simplistic and incorrect of me to think that the old connector was truncating data. The new connector's differing behaviour appears to be failing the whole export from MIM (i.e. all records show an error) where the old one seemingly didn't show any error. Do you have an idea why that might be? Are you using a different SQL library or something like that?

I will definitely configure maximum field lengths, now that I know about that feature. Thank you for that. Will that result in the whole MIM export being rejected, or just the objects where the configured maximum field lengths are exceeded? If the latter than I can remove my PowerShell transform to more easily highlight just the problematical records.

Normally this wouldn't be an issue with a new build or functionality rework, but in this case all we are doing is a FIM to MIM migration and we must minimise the changes we make to the solution.

Excellent!

And this is even better! :-)

Thank you very much.

Nice. To answer your other question, there isn't much different mechanically between the old and new connector. It's mostly database definition and schema changes. The only reasons I can think of why this wasn't a problem before would be that either the data didn't contain values which exceeded any field length limits or the database schema field lengths limits much higher and were reduced in a schema update. There could be some database configuration that changes the behaviour for handling string limits that may have changed, but that's only speculation on my part.

Fair enough. It's exactly the same upstream source, and a SQL backup/restore copy of the same downstream Expert database that is running just fine in FIM/IDB4, so I can't see an explanation either. Regardless, I have a way forward now so it's all good.