Identity Broker Forum

Connector schema attribute settings are reflected to the adapter in a join transformation

Hi Gents,

Raising this with regard to an issue experienced recently at DCCEEW.

An adapter primarily used to provision out to a target system was modified with a join transform to include an additional attribute from another connector. This attribute happened to be the key field of the connector it was sourced from, and so was naturally configured as a required field in that connector's schema. After the join transform was applied, the attribute was added to the adapter schema, but its required status was also reflected on the adapter.

Because this was a mapped field and was not among the attributes being exported through the adapter, exports failed with a schema validation error.

Attributes mapped via a join transform should not be set as required on the adapter schema.
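To make the requested behaviour concrete, here is a minimal sketch in Python. It is not how UNIFYBroker implements schemas: the `Attribute` type and `join_into_adapter_schema` function are hypothetical, and the only assumption is that an attribute brought in by a join keeps its name and type while its required flag is cleared on the adapter side.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Attribute:
    """Hypothetical schema attribute; not a UNIFYBroker type."""
    name: str
    type: str
    key: bool = False
    required: bool = False


def join_into_adapter_schema(adapter_schema, joined_attributes):
    """Return the adapter schema extended with attributes from a joined connector.

    Joined attributes are copied with required cleared, so a value that is
    only mapped in (and never exported) cannot fail export validation.
    """
    existing = {attr.name for attr in adapter_schema}
    relaxed = [
        replace(attr, required=False)
        for attr in joined_attributes
        if attr.name not in existing
    ]
    return list(adapter_schema) + relaxed


# The adapter's own key stays required; the joined connector's key does not.
adapter = [Attribute("AccountName", "String", key=True, required=True)]
joined = [Attribute("EmployeeId", "String", key=True, required=True)]
result = join_into_adapter_schema(adapter, joined)
```

With this rule the adapter's own attributes are untouched, and only the joined-in attribute loses its required status.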

Locker Field Search "Is-Null" True or False returns HTTP 500 Error

We are unable to search the locker using the "Is-Null" search term. This issue occurs across all environments that I have checked so far.

This search term works well in Connectors and Adapters and would be extremely useful for lockers as well.

Any attempt to use the Is-Null Search term in a locker returns the following error:

System.AggregateException: One or more errors occurred. ---> Unify.Framework.Client.SwaggerException: The HTTP status code of the response was not expected (500).

at Unify.Connect.Web.Client.LockerEntityClient.<SearchEntitiesAsync>d__11.MoveNext()

--- End of stack trace from previous location where exception was thrown ---

at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()

at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)

at Unify.Connect.Web.Client.ProfiledLockerEntityClient.<SearchEntitiesAsync>d__4.MoveNext()

--- End of inner exception stack trace ---

at System.Threading.Tasks.Task`1.GetResultCore(Boolean waitCompletionNotification)

at Unify.Connect.Web.LockerController.InnerRetrievalToResults[TResult](EntityRetrievalInformation`1 retrievalInformation, Func`3 getResults)

at Unify.Connect.Web.LockerController.LockerEntities(EntityRetrievalInformation`1 information)

at Unify.Connect.Web.LockerController.<LockerEntityData>d__65.MoveNext()

--- End of stack trace from previous location where exception was thrown ---

at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()

at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)

at lambda_method(Closure , Task )

at System.Web.Mvc.Async.TaskAsyncActionDescriptor.EndExecute(IAsyncResult asyncResult)

at System.Web.Mvc.Async.AsyncControllerActionInvoker.<>c__DisplayClass34.<BeginInvokeAsynchronousActionMethod>b__33(IAsyncResult asyncResult)

at System.Web.Mvc.Async.AsyncControllerActionInvoker.EndInvokeActionMethod(IAsyncResult asyncResult)

at System.Web.Mvc.Async.AsyncControllerActionInvoker.AsyncInvocationWithFilters.<InvokeActionMethodFilterAsynchronouslyRecursive>b__3c()

at System.Web.Mvc.Async.AsyncControllerActionInvoker.AsyncInvocationWithFilters.<>c__DisplayClass45.<InvokeActionMethodFilterAsynchronouslyRecursive>b__3e()

at System.Web.Mvc.Async.AsyncControllerActionInvoker.EndInvokeActionMethodWithFilters(IAsyncResult asyncResult)

at System.Web.Mvc.Async.AsyncControllerActionInvoker.<>c__DisplayClass1e.<>c__DisplayClass28.<BeginInvokeAction>b__19()

at System.Web.Mvc.Async.AsyncControllerActionInvoker.<>c__DisplayClass1e.<BeginInvokeAction>b__1b(IAsyncResult asyncResult)

---> (Inner Exception #0) HTTP Response: {"Message":"An error has occurred.","ExceptionMessage":"Illegal operator '==' at 'Unify.Product.IdentityBroker.FilterInformation'. The supported operators are: 'true, false'.","ExceptionType":"System.NotSupportedException","StackTrace":" at Unify.Connect.Web.IdentifierEntitySearchUtilityBase`2.GenerateSearchFunction(FilterInformation searchInformation, Lazy`1 schema)\r\n at Unify.Product.IdentityBroker.DefaultEntityControllerFilterCondition`1.Apply(FilterInformation information, IEnumerable`1 entities)\r\n at Unify.Product.IdentityBroker.EntityControllerSearchFilter`2.ApplyFilter(IEnumerable`1 entities, FilterInformation filterInformation, Guid partitionId)\r\n at Unify.Product.IdentityBroker.EntityControllerSearchFilter`2.<>c__DisplayClass7_0.<ApplyFilter>b__0(IEnumerable`1 filtered, FilterInformation filter)\r\n at System.Linq.Enumerable.Aggregate[TSource,TAccumulate](IEnumerable`1 source, TAccumulate seed, Func`3 func)\r\n at Unify.Product.IdentityBroker.EntityControllerSearchFilter`2.ApplySearch(SearchInformation searchInformation, IEnumerable`1 entities, Guid partitionId)\r\n at Unify.Product.Plus.LockerEntityController.SearchEntities(Guid partitionId, SearchInformation searchInformation)\r\n at lambda_method(Closure , Object , Object[] )\r\n at System.Web.Http.Controllers.ReflectedHttpActionDescriptor.ActionExecutor.<>c__DisplayClass10.<GetExecutor>b__9(Object instance, Object[] methodParameters)\r\n at System.Web.Http.Controllers.ReflectedHttpActionDescriptor.ExecuteAsync(HttpControllerContext controllerContext, IDictionary`2 arguments, CancellationToken cancellationToken)\r\n--- End of stack trace from previous location where exception was thrown ---\r\n at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()\r\n at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)\r\n at 
System.Web.Http.Controllers.ApiControllerActionInvoker.<InvokeActionAsyncCore>d__0.MoveNext()\r\n--- End of stack trace from previous location where exception was thrown ---\r\n at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()\r\n at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)\r\n at System.Web.Http.Controllers.ActionFilterResult.<ExecuteAsync>d__2.MoveNext()\r\n--- End of stack trace from previous location where exception was thrown ---\r\n at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()\r\n at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)\r\n at System.Web.Http.Filters.AuthorizationFilterAttribute.<ExecuteAuthorizationFilterAsyncCore>d__2.MoveNext()\r\n--- End of stack trace from previous location where exception was thrown ---\r\n at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()\r\n at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)\r\n at System.Web.Http.Dispatcher.HttpControllerDispatcher.<SendAsync>d__1.MoveNext()"} Unify.Framework.Client.SwaggerException: The HTTP status code of the response was not expected (500).

at Unify.Connect.Web.Client.LockerEntityClient.<SearchEntitiesAsync>d__11.MoveNext()

--- End of stack trace from previous location where exception was thrown ---

at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()

at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)

at Unify.Connect.Web.Client.ProfiledLockerEntityClient.<SearchEntitiesAsync>d__4.MoveNext()<---

Closing as a patch has been provided for 5.3, and was rolled into all UNIFYConnect Cloud deployments.

Errors on LDAP gateway leave open LDAP active connections

Hi Team,

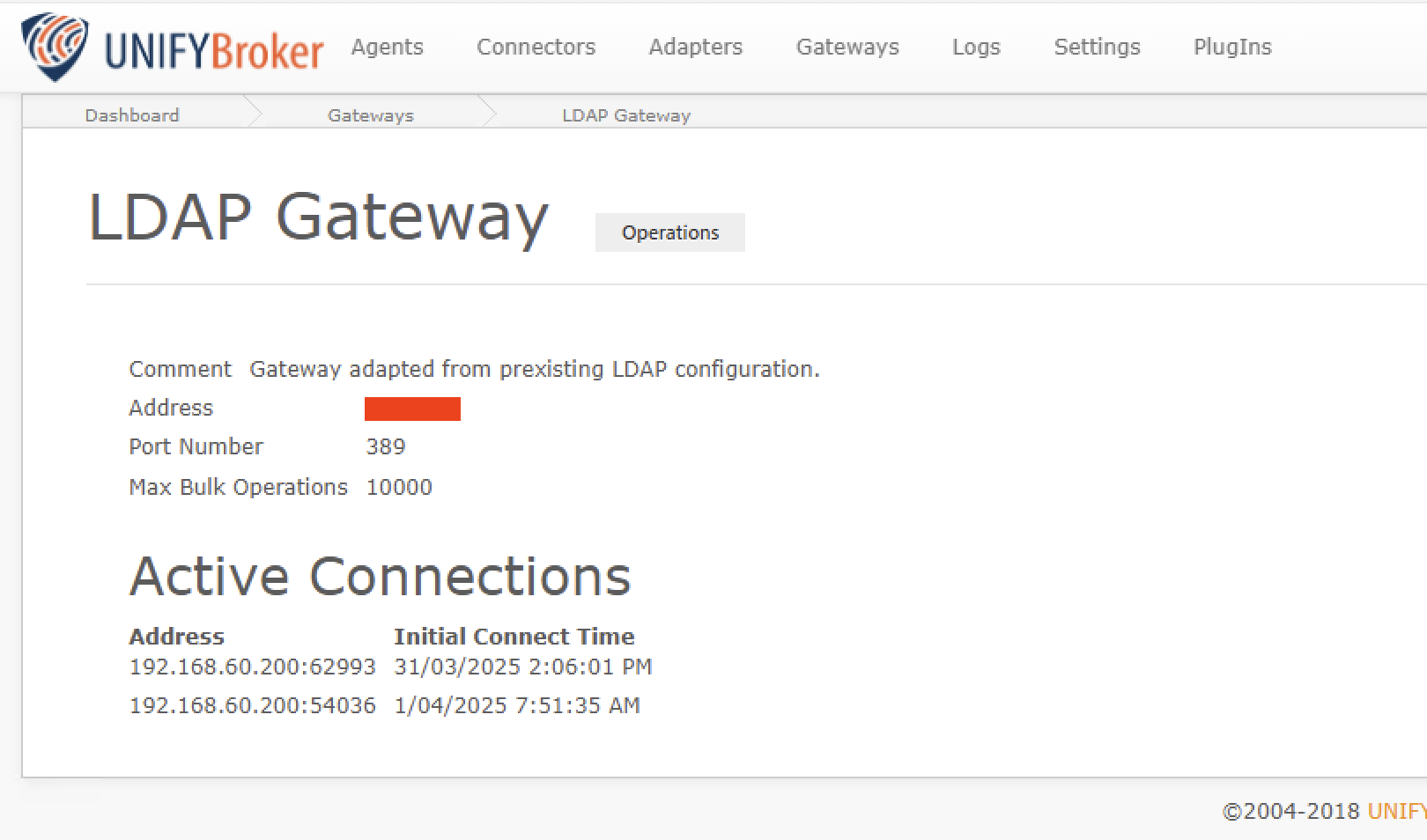

I have noticed active connections building up within UNIFYBroker (v5.3.2) for quite some time. The list grows endlessly; I sometimes look back and see more than 20 active connections in an environment where all operations run sequentially. All LDAP connections come from MIM Sync.

I suspect there are two issues here: one that generates the error in the first place, and a second where the connection remains open after a failure.

As you can see in the screenshot, there is an active connection remaining open at 2:06 PM. This connection is immediately followed by errors in the UNIFYBroker logs:

" A client has connected to the LDAP endpoint from address: 192.168.60.200:62993."

"An error occurred for gateway LDAP Gateway (1364c700-99c8-40aa-801d-0153427e62a9) on client from 192.168.60.200:62993. More details:

Unify.Product.IdentityBroker.PoorlyConstructedLDAPMessageException: The LDAP server for gateway LDAP Gateway (1364c700-99c8-40aa-801d-0153427e62a9) received a poorly constructed LDAP message and failed with the error: The LDAP message tag is unparsable.

at Unify.Product.IdentityBroker.LDAPConnection.ReadMessage()

at Unify.Product.IdentityBroker.LDAPConnection.TryReadMessage(RfcLdapMessage& message, RfcLdapResult& error)"

" Handling of LDAP extended request.

Handling of LDAP extended request from user Anonymous on connection 192.168.60.200:62993 failed with error "Authentication failed because the remote party has closed the transport stream.". Duration: 00:00:00.1018681."

"An error occurred for gateway LDAP Gateway (1364c700-99c8-40aa-801d-0153427e62a9) on client from 192.168.60.200:62993. More details:

Internal Server Error #11: System.Security.Authentication.AuthenticationException: Authentication failed because the remote party has closed the transport stream.

at System.Net.Security.SslState.StartReadFrame(Byte[] buffer, Int32 readBytes, AsyncProtocolRequest asyncRequest)

at System.Net.Security.SslState.StartReceiveBlob(Byte[] buffer, AsyncProtocolRequest asyncRequest)

at System.Net.Security.SslState.CheckCompletionBeforeNextReceive(ProtocolToken message, AsyncProtocolRequest asyncRequest)

at System.Net.Security.SslState.ProcessReceivedBlob(Byte[] buffer, Int32 count, AsyncProtocolRequest asyncRequest)

at System.Net.Security.SslState.StartReceiveBlob(Byte[] buffer, AsyncProtocolRequest asyncRequest)

at System.Net.Security.SslState.CheckCompletionBeforeNextReceive(ProtocolToken message, AsyncProtocolRequest asyncRequest)

at System.Net.Security.SslState.ProcessReceivedBlob(Byte[] buffer, Int32 count, AsyncProtocolRequest asyncRequest)

at System.Net.Security.SslState.StartReceiveBlob(Byte[] buffer, AsyncProtocolRequest asyncRequest)

at System.Net.Security.SslState.ForceAuthentication(Boolean receiveFirst, Byte[] buffer, AsyncProtocolRequest asyncRequest, Boolean renegotiation)

at System.Net.Security.SslState.ProcessAuthentication(LazyAsyncResult lazyResult)

at Unify.Product.IdentityBroker.LDAPConnectionSecurityExtensions.TLSHandshake(ILDAPConnection connection, IRfcLdapMessage message, ISecurityEngine securityEngine)

at Unify.Product.IdentityBroker.StartTLSRequestHandlerSecurityDecorator.HandleRequest(IRfcLdapMessage message, CancellationToken token, Action`1 postAction)

at Unify.Product.IdentityBroker.LDAPRequestHandlerSecurityDecorator.HandleRequest(IRfcLdapMessage message, CancellationToken token, Action`1 postAction)

at Unify.Product.IdentityBroker.LDAPConnection.d__35.MoveNext()"
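The second suspected issue (a connection staying in the active list after a failure) is the kind of thing a try/finally around the per-connection handler is meant to prevent. The sketch below is Python and purely illustrative: `FakeConnection`, `serve` and the message strings are hypothetical stand-ins, not UNIFYBroker code, and it says nothing about what the gateway actually does internally.

```python
class FakeConnection:
    """Stand-in for a client connection; records whether it was closed."""

    def __init__(self, messages):
        self.messages = list(messages)
        self.closed = False

    def read_message(self):
        message = self.messages.pop(0)
        if message == "garbage":
            # Mirrors the kind of parse failure shown in the log above.
            raise ValueError("The LDAP message tag is unparsable.")
        return message

    def close(self):
        self.closed = True


def serve(connection, active_connections):
    """Handle messages until the client unbinds, always releasing the connection."""
    active_connections.add(connection)
    try:
        while True:
            if connection.read_message() == "unbind":
                return "ok"
    except ValueError as error:
        return f"error: {error}"
    finally:
        # Runs on success and on failure, so a bad message cannot leak the entry.
        connection.close()
        active_connections.discard(connection)


active = set()
bad = FakeConnection(["bind", "garbage"])
outcome = serve(bad, active)
```

After the failed exchange, `bad.closed` is true and `active` is empty, which is the behaviour the active connections list would be expected to show.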

CSV Export for Entity Searches

Hi,

Occasionally we may need to export entity data from Connectors, Adapters etc. to assist with investigating or troubleshooting an issue. I've found that it can be difficult to export information from entity searches. The only way I've really come across is to manually highlight the rows in the entity search and copy across to a spreadsheet to refine.

Is there an easier way to export entities, particularly from refined searches where we may not want all the data? I've considered using the test harness, but that only provides a dump from a Connector and is not really useful for Adapter or Locker entity searches.

Otherwise, would it be possible to include a feature to have the ability to export a CSV of data from the current entity search?
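As an illustration of the output I have in mind, here is a small Python sketch that turns a list of entities into CSV text with only the selected columns. The entity dictionaries and the `entities_to_csv` helper are hypothetical; how the search results would actually be obtained from Broker is not covered here.

```python
import csv
import io


def entities_to_csv(entities, columns):
    """Serialise search results to CSV text, keeping only the chosen columns."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, lineterminator="\n")
    writer.writeheader()
    for entity in entities:
        # Missing attributes become empty cells rather than raising.
        writer.writerow({col: entity.get(col, "") for col in columns})
    return buffer.getvalue()


# Hypothetical entities, as they might appear in a refined entity search.
sample = [
    {"AccountName": "jsmith", "Mail": "jsmith@example.com", "Dept": "IT"},
    {"AccountName": "adoe", "Dept": "HR"},
]
csv_text = entities_to_csv(sample, ["AccountName", "Mail"])
```

Only the requested columns are written, so a refined search would not need to carry every attribute into the spreadsheet.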

Look forward to hearing your thoughts and feedback.

Thanks

UNIFYBroker service failing to start

Version v5.3.2 Revision #0

After performing the following sequence of events, the UNIFYBroker service failed to start.

1. Clearing the entities from a decent sized Connector and associated Adapter (~10,000 entities)

2. Deleting the Connector and Adapter

3. Restarting the UNIFYBroker service

When failing to start, the event log shows two errors (I know the second stack trace looks like I've truncated it, but that is the full error I receive in the log):

Error - The following occurred in module: Identity Broker

The following occurred in the Error module during the Identity Broker cycle of the server: start

Service cannot be started. Unify.Framework.UnifyServiceStartException: The DELETE statement conflicted with the REFERENCE constraint "FK_Entity_ObjectClass". The conflict occurred in database "Unify.IdentityBroker", table "dbo.Entity", column 'ObjectClassId'.

The statement has been terminated. ---> System.Data.SqlClient.SqlException: The DELETE statement conflicted with the REFERENCE constraint "FK_Entity_ObjectClass". The conflict occurred in database "Unify.IdentityBroker", table "dbo.Entity", column 'ObjectClassId'.

The statement has been terminated.

at System.Data.SqlClient.SqlConnection.OnError(SqlException exception, Boolean breakConnection, Action`1 wrapCloseInAction)

at System.Data.SqlClient.TdsParser.ThrowExceptionAndWarning(TdsParserStateObject stateObj, Boolean callerHasConnectionLock, Boolean asyncClose)

at System.Data.SqlClient.TdsParser.TryRun(RunBehavior runBehavior, SqlCommand cmdHandler, SqlDataReader dataStream, BulkCopySimpleResultSet bulkCopyHandler, TdsParserStateObject stateObj, Bo...

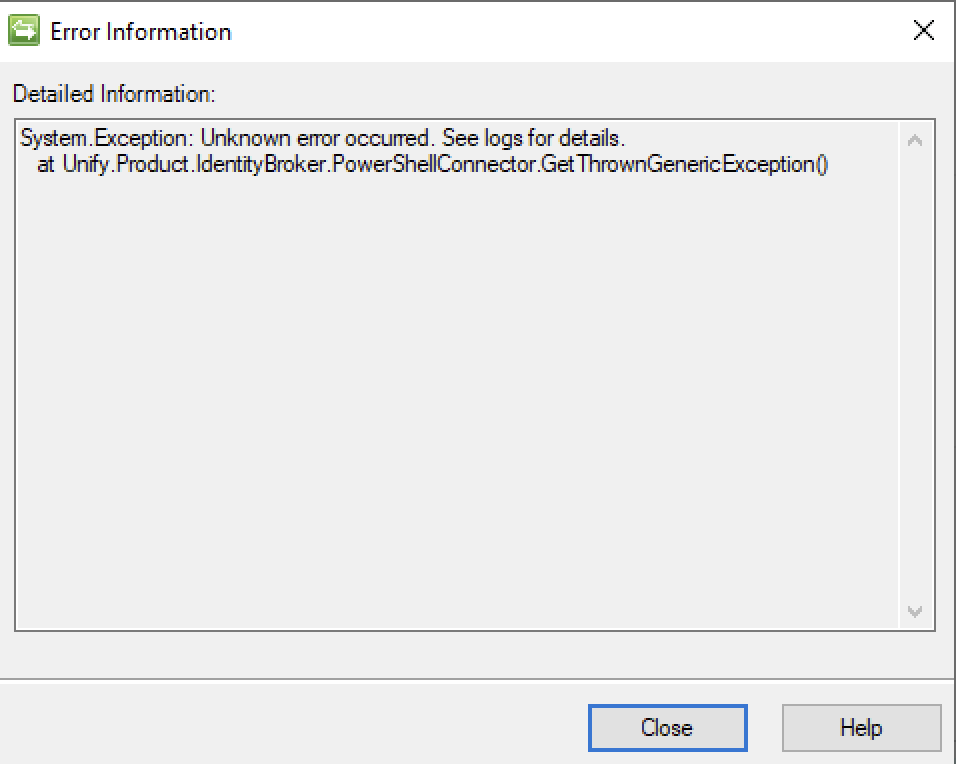

Return error to MIM when exporting via a PowerShell connector

Is there a way to return an error to MIM for individual entities when exporting via a PowerShell connector? My export script uses the standard try/catch and pushes a failure where an entity fails to export, e.g.:

    try {
        # Export entity
    } catch {
        $components.Failures.Push($entity);
    }

Though when an entity fails it returns a very generic error to MIM.

Is there a way to return a more descriptive error message along with each of the individual failures?

Register Contributions where the contributing attribute is from a joining connector?

Is it possible to call register-contribution in an adaptor PowerShell schema, where the source field is not from the base connector, but a joining connector?

I have been working on fixing the Register-contribution functions in a customer environment, however some of the values that are imported and are eventually used for time offset flag calculations, are coming from a non-base connector using the "Join on" transformation.

When I test using an imported change from the base connector, the changes are scheduled for the correct time. When I test using an imported change from a joining connector, my test fails (the imported change flows to the adaptor but does not appear to register the future change). This leads me to conclude that an imported change from a joining connector may not register future changes.

Is this correct? Is there a solution, given that the termination/end dates required for some of the calculations are not currently available on the base connector?

This has been implemented and is available in the release of UNIFYConnect V6, which will be made available shortly.

A patch for 5.3 is available on request.

PowerShell group connector returning null for dn attribute

Version v5.3.2 Revision #0

I have a PowerShell connector that queries a database to build groups, including their memberships. When importing, it returns the following error. The connector does not have an associated adapter.

I have another connector using the same script that works just fine, which would indicate a data issue; however, there are no obvious fields that are null.

Connector Processor Connector processing failed.

Connector Processing page 1 for connector Test Group Errors failed with reason Value cannot be null.

Parameter name: dn. Duration: 00:00:18.4144781.

Error details:

System.ArgumentNullException: Value cannot be null.

Parameter name: dn

at Unify.Framework.IO.DistinguishedName.op_Implicit(DistinguishedName dn)

at Unify.Product.IdentityBroker.Repository.EntityDistinguishedNameValueDataUtility`1.ConvertValueToString(DistinguishedNameValue value)

at Unify.Product.IdentityBroker.Repository.StringBasedValueDataUtilityBase`2.SetEntityValue(__EntityValueInsertRow dataValue, TValue value)

at Unify.Product.IdentityBroker.Repository.EntitySingleValueDataUtilityBase`2.CreateEntityValue(TEntityKey key, IValue value, IEntityCollectionKeyUtility`1 collectionKeyUtility, EntityDataSet set, __EntityInsertRow row, EntityDataContext sourceContext)

at Unify.Product.IdentityBroker.Repository.KnownEntityContextBase`4.ConvertEntityValueToDataValue(KeyValuePair`2 entityValueAndKey, __EntityInsertRow row, EntityDataSet entityDataSet, EntityDataContext sourceContext)

at Unify.Product.IdentityBroker.Repository.KnownEntityContextBase`4.<>c__DisplayClass33_0.b__0(KeyValuePair`2 entityValueAndKey)

at System.Linq.Enumerable.WhereSelectEnumerableIterator`2.MoveNext()

at System.Linq.Enumerable.d__17`2.MoveNext()

at Unify.Framework.Visitor.Visit[T](IEnumerable`1 visitCollection, Action`2 visitor)

at Unify.Product.IdentityBroker.Repository.KnownEntityContextBase`4.InsertItems(ISet`1 addedItems, EntityDataContext sourceContext, SqlConnection connection)

at Unify.Framework.Data.LinqContextConversionBase`4.SubmitChanges()

at Unify.Product.IdentityBroker.SaveChangedEntitiesTransformationUnit.Transform(IDictionaryTwoPassDifferenceReport`4 input)

at Unify.Product.IdentityBroker.ConnectorEntityChangeProcessor.ProcessEntities(IEnumerable`1 connectorEntities, IEnumerable`1 repositoryEntities, IEntityChangesReportGenerator`2 reportGenerator)

at Unify.Product.IdentityBroker.RepositoryChangeDetectionWorkerBase.PerformChangeDetectionOnConnectorEntityPage(IEnumerable`1 connectorEntities, Int32& index, Int32 entitiesProcessedSoFar, IEntityChangesReportGenerator`2 reportGenerator, IHashSet`1 seenKeys)

at Unify.Product.IdentityBroker.RepositoryChangeDetectionWorkerBase.<>c__DisplayClass11_1.b__0(IEnumerable`1 page)

at Unify.Framework.Visitor.ThreadsafeVisitorEvaluator`1.ThreadsafeItemEvaluator.Evaluate()

Change detection engine Change detection engine import all items failed.

Change detection engine import all items for connector Test Group Errors failed with reason An error occurred while evaluating a task on a worker thread. See the inner exception details for information.. Duration: 00:00:49.7519745

Error details:

Unify.Framework.EvaluatorVisitorException: An error occurred while evaluating a task on a worker thread. See the inner exception details for information. ---> System.ArgumentNullException: Value cannot be null.

Parameter name: dn

at Unify.Framework.IO.DistinguishedName.op_Implicit(DistinguishedName dn)

at Unify.Product.IdentityBroker.Repository.EntityDistinguishedNameValueDataUtility`1.ConvertValueToString(DistinguishedNameValue value)

at Unify.Product.IdentityBroker.Repository.StringBasedValueDataUtilityBase`2.SetEntityValue(__EntityValueInsertRow dataValue, TValue value)

at Unify.Product.IdentityBroker.Repository.EntitySingleValueDataUtilityBase`2.CreateEntityValue(TEntityKey key, IValue value, IEntityCollectionKeyUtility`1 collectionKeyUtility, EntityDataSet set, __EntityInsertRow row, EntityDataContext sourceContext)

at Unify.Product.IdentityBroker.Repository.KnownEntityContextBase`4.ConvertEntityValueToDataValue(KeyValuePair`2 entityValueAndKey, __EntityInsertRow row, EntityDataSet entityDataSet, EntityDataContext sourceContext)

at Unify.Product.IdentityBroker.Repository.KnownEntityContextBase`4.<>c__DisplayClass33_0.b__0(KeyValuePair`2 entityValueAndKey)

at System.Linq.Enumerable.WhereSelectEnumerableIterator`2.MoveNext()

at System.Linq.Enumerable.d__17`2.MoveNext()

at Unify.Framework.Visitor.Visit[T](IEnumerable`1 visitCollection, Action`2 visitor)

at Unify.Product.IdentityBroker.Repository.KnownEntityContextBase`4.InsertItems(ISet`1 addedItems, EntityDataContext sourceContext, SqlConnection connection)

at Unify.Framework.Data.LinqContextConversionBase`4.SubmitChanges()

at Unify.Product.IdentityBroker.SaveChangedEntitiesTransformationUnit.Transform(IDictionaryTwoPassDifferenceReport`4 input)

at Unify.Product.IdentityBroker.ConnectorEntityChangeProcessor.ProcessEntities(IEnumerable`1 connectorEntities, IEnumerable`1 repositoryEntities, IEntityChangesReportGenerator`2 reportGenerator)

at Unify.Product.IdentityBroker.RepositoryChangeDetectionWorkerBase.PerformChangeDetectionOnConnectorEntityPage(IEnumerable`1 connectorEntities, Int32& index, Int32 entitiesProcessedSoFar, IEntityChangesReportGenerator`2 reportGenerator, IHashSet`1 seenKeys)

at Unify.Product.IdentityBroker.RepositoryChangeDetectionWorkerBase.<>c__DisplayClass11_1.b__0(IEnumerable`1 page)

at Unify.Framework.Visitor.ThreadsafeVisitorEvaluator`1.ThreadsafeItemEvaluator.Evaluate()

--- End of inner exception stack trace ---

at Unify.Framework.Visitor.ThreadsafeVisitorEvaluator`1.CheckForException()

at Unify.Framework.Visitor.ThreadsafeVisitorEvaluator`1.WaitForCompletedThreads()

at Unify.Framework.Visitor.ThreadsafeVisitorEvaluator`1.Visit()

at Unify.Framework.Visitor.VisitEvaluateOnThreadPool[T](IEnumerable`1 visitCollection, Action`2 visitor, Int32 maxThreads)

at Unify.Product.IdentityBroker.RepositoryChangeDetectionWorkerBase.PerformChangeDetection(IEnumerable`1 connectorEntities)

at Unify.Product.IdentityBroker.ChangeDetectionImportAllJob.ImportAllChangeProcess()

at Unify.Product.IdentityBroker.ChangeDetectionImportAllJob.RunBase()

at Unify.Framework.DefinedScopeJobAuditTrailJobDecorator.Run()

at Unify.Product.IdentityBroker.ConnectorJobExecutor.<>c__DisplayClass30_0.b__0()

at Unify.Framework.AsynchronousJobExecutor.PerformJobCallback(Object state)

Thanks for the update, Hayden. I was just about to respond. It seems a 'not-quite-null' value was being parsed into a DN field, and when Broker tried to store it in the entity context it couldn't obtain a valid string value to store. Some types in Broker, including the DN type, treat an empty value differently from a null value, so if anything other than null is seen, Broker will attempt to convert it (and in this case, fail).
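One way to guard against this on the script side is to normalise blank values to a true null before they reach a DN field. The sketch below shows the idea in Python; `normalise_dn` and the sample rows are hypothetical, and the same check would need to be written in whatever language the connector script uses.

```python
def normalise_dn(value):
    """Return None for missing or blank DN values, otherwise the trimmed string."""
    if value is None:
        return None
    text = str(value).strip()
    # An empty or whitespace-only string is treated as "no value".
    return text or None


# Hypothetical rows as they might come back from the source database.
rows = [
    {"dn": "CN=Group A,OU=Groups"},
    {"dn": ""},
    {"dn": "   "},
    {"dn": None},
]
cleaned = [normalise_dn(row["dn"]) for row in rows]
```

Here only the first row keeps a value; the empty, whitespace-only and null rows all become `None`, so nothing 'not-quite-null' is handed on for conversion.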

Reflect change entities to adapter errors about duplicate entries when there are no duplicate key values in the connector.

I've been having issues with particular PowerShell connectors/adapters in UNIFYBroker where reflecting change entities to the adapter complains about duplicate entries when there are no duplicate key values in the connector.

The schema setup between the connectors and adapters is an ID key in the connector that is then used within the adapter as the DN. So it is a very simple DN template. E.g:

Name         Type    Key   Read-only  Required
AccountName  String  True  True       True

Distinguished Name Template: CN=[AccountName]
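One diagnostic that may be worth running against the connector data is to render the DN template for every entity and look for collisions that a plain key comparison would miss. The Python sketch below assumes, without confirmation from the product, that DNs might be compared case-insensitively and with surrounding whitespace ignored; `render_dn`, `find_duplicate_dns` and the sample entities are hypothetical.

```python
import re
from collections import defaultdict


def render_dn(template, entity):
    """Fill [Field] placeholders in a DN template from an entity's values."""
    return re.sub(r"\[([^\]]+)\]", lambda m: str(entity[m.group(1)]), template)


def find_duplicate_dns(template, entities):
    """Group entity keys by rendered DN, compared case-insensitively and trimmed."""
    by_dn = defaultdict(list)
    for entity in entities:
        dn = render_dn(template, entity).strip().lower()
        by_dn[dn].append(entity["AccountName"])
    # Keep only DNs produced by more than one entity.
    return {dn: keys for dn, keys in by_dn.items() if len(keys) > 1}


entities = [
    {"AccountName": "Staff-All"},
    {"AccountName": "staff-all"},  # distinct as a string, same DN if case is ignored
    {"AccountName": "Students"},
]
duplicates = find_duplicate_dns("CN=[AccountName]", entities)
```

If this reports nothing for the affected connector, key values colliding on case or whitespace can be ruled out as the cause.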

The issues are generally fixed by clearing and repopulating the whole adapter, which is not a sustainable solution since the problem recurs weekly, sometimes more often.

These errors also don't seem to follow any obvious failures on the connector side, which is what I had previously attributed these issues to. All these connectors have deletion thresholds set up of at least 50%.

It's as if Broker gets itself tied up, even though the schedules in the environment have been reduced to the point where only one operation is interacting with Broker at a time.

SQL maintenance is also performed frequently and the SQL instance has plenty of resources allocated.

Version details: v5.3.2 Revision #0

Any help would be appreciated as this has been a long ongoing issue that I've seen across multiple environments.

Adapter

Adapter eb42757f-2f23-4228-928e-993942b0c050 page errored on page reflection. Duration: 00:00:21.5551444. Error: Unify.Framework.UnifyDataException: Duplicate DNs detected on adapter eb42757f-2f23-4228-928e-993942b0c050. Reflection failed. Duplicate DNs: CN=<obfuscated name>,OU=sIAMGroups,DC=IdentityBroker, CN=<obfuscated name 2>,OU=sIAMGroups,DC=IdentityBroker.

at Unify.Product.IdentityBroker.DuplicateDnDetector.DetectDuplicateDns(IDictionaryTwoPassDifferenceReport`4 report)

at Unify.Product.IdentityBroker.Adapter.ReflectChangesInner()

at Unify.Product.IdentityBroker.Adapter.ReflectChanges()

at Unify.Product.IdentityBroker.AdapterAuditingDecorator.ReflectChanges()

at Unify.Product.IdentityBroker.AdapterNotifierDecorator.ReflectChanges()

at Unify.Product.IdentityBroker.ReflectAdapterOnChangeDueJob.b__9_0(IOperationalAdapter adapter).

Error details:

Unify.Framework.UnifyDataException: Duplicate DNs detected on adapter eb42757f-2f23-4228-928e-993942b0c050. Reflection failed. Duplicate DNs: CN=<obfuscated name>,OU=sIAMGroups,DC=IdentityBroker, CN=<obfuscated name 2>,OU=sIAMGroups,DC=IdentityBroker.

at Unify.Product.IdentityBroker.DuplicateDnDetector.DetectDuplicateDns(IDictionaryTwoPassDifferenceReport`4 report)

at Unify.Product.IdentityBroker.Adapter.ReflectChangesInner()

at Unify.Product.IdentityBroker.Adapter.ReflectChanges()

at Unify.Product.IdentityBroker.AdapterAuditingDecorator.ReflectChanges()

at Unify.Product.IdentityBroker.AdapterNotifierDecorator.ReflectChanges()

at Unify.Product.IdentityBroker.ReflectAdapterOnChangeDueJob.b__9_0(IOperationalAdapter adapter)

Adapter Request to reflect change entities of the adapter.

Request to reflect change entities of the eMinerva Student: Groups (eb42757f-2f23-4228-928e-993942b0c050) adapter errored with message: Duplicate DNs detected on adapter eb42757f-2f23-4228-928e-993942b0c050. Reflection failed. Duplicate DNs: CN=<obfuscated name>,OU=sIAMGroups,DC=IdentityBroker, CN=<obfuscated name 2>,OU=sIAMGroups,DC=IdentityBroker.. Duration: 00:00:59.9516712

Error details:

Unify.Framework.UnifyDataException: Duplicate DNs detected on adapter eb42757f-2f23-4228-928e-993942b0c050. Reflection failed. Duplicate DNs: CN=<obfuscated name>,OU=sIAMGroups,DC=IdentityBroker, CN=<obfuscated name 2>,OU=sIAMGroups,DC=IdentityBroker.

at Unify.Product.IdentityBroker.DuplicateDnDetector.DetectDuplicateDns(IDictionaryTwoPassDifferenceReport`4 report)

at Unify.Product.IdentityBroker.Adapter.ReflectChangesInner()

at Unify.Product.IdentityBroker.Adapter.ReflectChanges()

at Unify.Product.IdentityBroker.AdapterAuditingDecorator.ReflectChanges()

at Unify.Product.IdentityBroker.AdapterNotifierDecorator.ReflectChanges()

at Unify.Product.IdentityBroker.ReflectAdapterOnChangeDueJob.b__9_0(IOperationalAdapter adapter)

Closed as no further information provided.

UNIFYConnect update threshold/safety catch

A UNIFYConnect customer has requested a safety catch feature that stops updates if they exceed a certain threshold. I know that Broker/BrokerPlus has a safety catch feature for entity deletion thresholds, but does anything currently exist for updates? Ideally this would be a check that, if the number of changes on a link is over a set amount, stops the sync, disables the schedules for that link, and then logs an error to be picked up by monitoring/alerting.
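The check described above could look roughly like the following Python sketch. It is only an illustration of the requested logic: the `link` dictionary, `run_sync` and `max_updates` are hypothetical names, and a real implementation would write to the product's logs rather than store the message on the link.

```python
def run_sync(link, pending_updates, max_updates):
    """Apply pending updates unless their count exceeds the safety threshold.

    On a breach, nothing is applied, the link's schedules are disabled, and
    an error message is recorded for monitoring to pick up.
    """
    if len(pending_updates) > max_updates:
        link["schedules_enabled"] = False
        link["last_error"] = (
            f"Update threshold exceeded on link {link['name']}: "
            f"{len(pending_updates)} pending updates, limit is {max_updates}"
        )
        return False
    link["applied"] = link.get("applied", 0) + len(pending_updates)
    link["last_error"] = None
    return True


link = {"name": "HR to AD", "schedules_enabled": True, "last_error": None}
small_batch_ok = run_sync(link, list(range(10)), max_updates=500)
large_batch_ok = run_sync(link, list(range(600)), max_updates=500)
```

The small batch is applied as normal; the large batch is refused, leaving the link with its schedules disabled and an error recorded.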

The only topic I could find on this at the moment is Safety Catch Feature / UNIFYBroker Forum / UNIFY Solutions.

Update Threshold / Safety Catch now available with Channels feature on UNIFYConnect v7.0.195159
